How I Won the Best Predictive Model Challenge at the Applied Machine Learning Conference

A few weeks ago, I saw a tweet from Data Science Renee about the Open Data Challenge associated with the Applied Machine Learning Conference (part of the TomTom Founders Festival in Charlottesville, VA). I took a peek at the data and made two judgment calls: (1) that it wouldn’t take me too long to develop a model for the challenge and (2) that I might have a chance at winning.

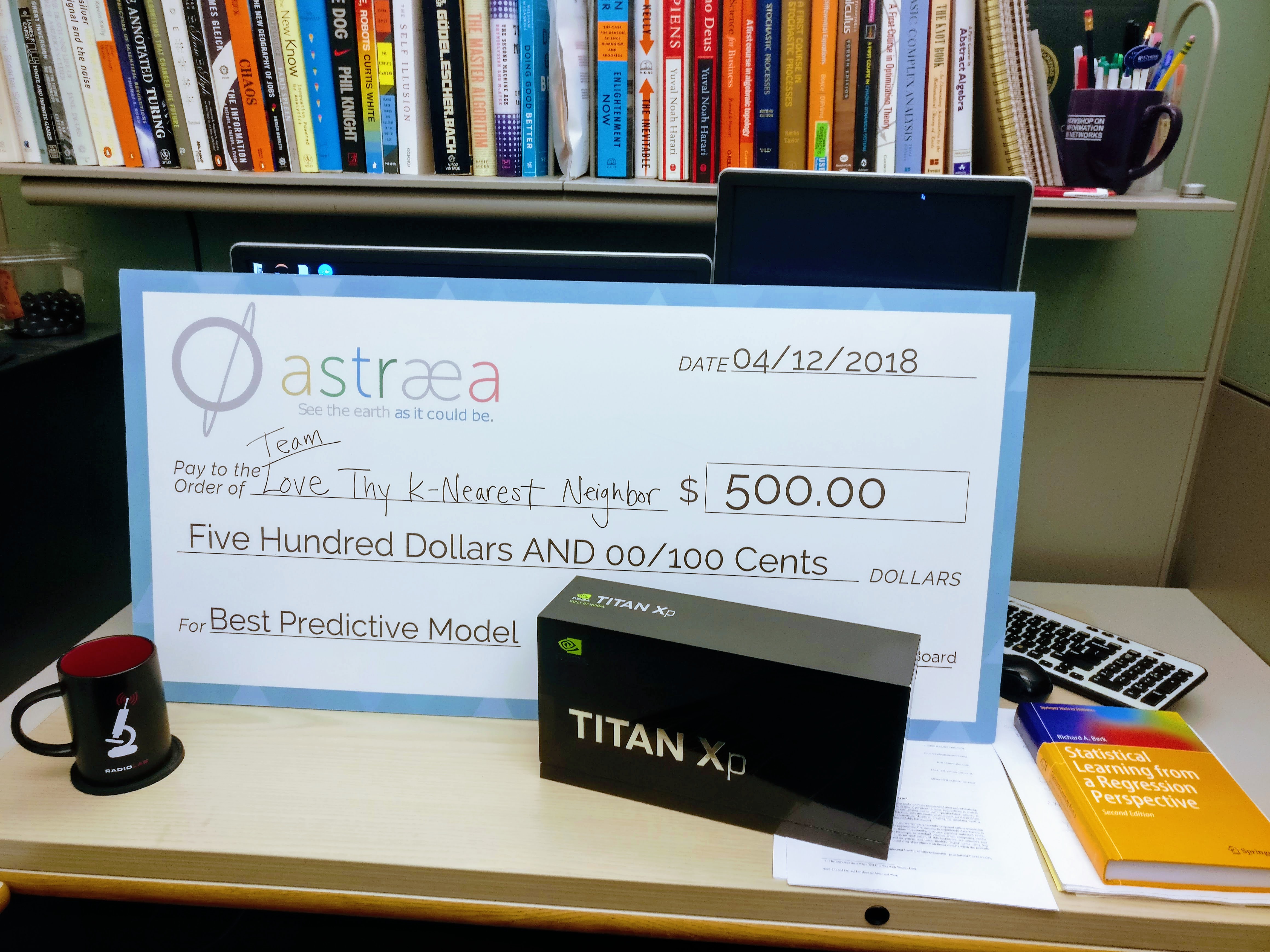

While I was terribly wrong about the first of these judgments, the second one turned out to be true. Last week, I made a quick trip down to the AMLC in Charlottesville, where I accepted a pretty cool prize for submitting the best predictive model (giant checks FTW) and gave a 3 minute overview of my modeling methodology (slides for that presentation here). Since 3 minutes was barely enough time to sneeze, I wanted to write a post with some more detail about the model I used to win the challenge. If you want to look at my code directly, I posted my files on GitHub here; I also made a Jupyter notebook walkthrough that provides a little more hands-on description of the material covered in this write-up.

Context and Problem Setup

Conveniently, there was a “best data storytelling” part of the Open Data Challenge. So rather than doing a deep dive about the context of the data here, I am going to point you toward the winning entry for that part of the challenge (congratulations to Team Hack’d).

For the purposes of the predictive part of the challenege, we were given three different time series about the usage of the public WiFi networks at Charlottesville’s downtown mall: number of web clients (plotted below), number of web sessions, and total traffic that passed through the network. The first two of these were daily time series, while the last one was measured in 4-hour intervals. Our training data consisted of the time series data from January 1, 2017 through December 20, 2017; the challenege was to predict these three variables for the week of December 21-27, 2017. The objective of the challenge was to make predictions over this time period with the smallest mean absolute percentage error (MAPE):

\[\frac{100}{n} \sum_{i=1}^{n}\left| \frac{y_i - \hat{y}_i}{y_i} \right|\]My Predictors

Your model is only as good as your predictors, so my first task was to find a set of variables that would be correlated with public WiFi usage in downtown Charlottesville. While we were encouraged to look through C-ville’s new open data portal, I didn’t find anything that struck me as being super predictive of downtown pedestrian traffic. I thought about using parking ticket data, but even then—if you think hard enough about it—there is a lot of room between parking ticket incidence and pedestrian WiFi usage. Given that I didn’t feel like spending all my time figuring out to build a geo-query to find nearby parking tickets, I gave up on the open data portal fairly early on.

This left me with the rest of the internet to scour for variables that would help my cause. After searching around, these were the ones that I ultimately came up with:

- Calendar data: Including features like day of the week are pretty standard in time series data; but my intuition was that other factors like federal holidays and season of the year would be important factors to include in my model. One unique challenge with this dataset is that Christmas was in the test set. With less than one calendar year of data though, there was no way to see learn what traffic was like on previous Christmases. My solution to this was to treat Thanksgiving as a special training data point, using the logic that pedestrian traffic around the day of Thanksgiving would probably be similar to pedestrian traffic around the day of Christmas. All told, these were the variables I included:

- Day of week indicators

- Quarter indicators

- Federal holiday indicators

- Specific indicators for the day before, day of, and day after Thanksgiving and Christmas

- Weather data: This is a no-brainer. Weather and pedestrian traffic are clearly correlated, espeically in downtown urban areas. To get this data, I used Google Sheets ImportHTML function to directly import Weather Underground’s historical weather data into a spreadsheet. Since so much of the weather variables are highly correlated, I chose a handful that I thought would most influence traffic:

- Daily High Temperature (and its square)

- Daily Precipitation (and its sqaure)

- Daily Low Humidity (and its square)

- Indicator for thunderstorms

Weather data caveat: Our models were forbidden from using “realized” data, which means I couldn’t use the actually observed weather as predictors on the test data. I spent way too long trying to find forecasted weather data at the last date in my training data, before ultimately giving up (if you know how to get historical forecasts—not actual weather!—let me know, because I have to believe there is a solution to this problem). In the end, I just used the averages for all these predictors in the 2 weeks leading up to my test period as the values to make my final predictions. This means my weather predictors were identical for all 7 days in the test period, but this turned out not to hurt me too much.

- Local events data: I had never been to Charlottesville before this year’s AMLC, but I surmised that the downtown mall is a fairly important venue for local events (festivals, farmer’s markets, etc.). After poking around some municipal websites, I found an events calendar that listed the name/date of dozens of events at the mall throughout the year. I wrote a small scraper to fetch this data and organize it into a CSV. Also, I noticed that some of the events in the test period were recurring events throughout the month of December (the “Holiday Market” and the “Gingerbread Express”). Assuming turnout to these events would be somewhat similar in training/test periods, I added extra dummy variables to capture this effect:

- Community event indicator

- Community event count (just the total number of events that day)

- Indicators for “Holiday Market” and “Gingerbread Express”

- UVA basketball schedule: College basketball is one of my favorite sports to watch and the UVA Caviliers (based in Charlottesville) were very highly ranked for much of 2017 (sometimes as the number 1 team in the country). From what I could tell, there were a number of restaurants and sports bars in the vicinity of the downtown mall. I figured that nights when UVA was playing a basketball would attract some extra visitors to the downtown area. Again, I used Google Sheets to quickly scrape ESPN’s basketball schedule for UVA’s 2016-2017 and 2017-2018 seasons. The variables I ended up including were:

- Basketball game indicator

- Home/away game indicator

- Whether opponent was also a ranked team

- Number of cumulative games played up to that point (since games toward the end of the season are more exciting than those at the beginning)

- Lag variable: It’s often the case in time series data that the best predictor for today is what happened yesterday. Since we had a 7-day test period, I couldn’t directly use a single day lag variable, but I could use a one week lag (i.e., simply include the outcome variable from the week prior as a predictor for the current week.

- 7-day lagged dependent variable

Model Exploration

Since we only had around 350 total days of training data, my first inclination was to use a simple linear model. While fancy methods like neural networks have received a lot of attention lately, I knew from experience that deep learning models really only show their true value when trained on massive amounts of data. Andrew Ng gave a talk on this subject with a slide that highlights what makes deep learning so powerful in the presence of big data (below). If you invert the argument, it implies NN’s aren’t really expected to perform any better than simpler models when trained on small data. This is not an exact analogy, but choosing a model with explicit functional form is a little bit like having a strong prior (in the Bayesian sense).

That being said, given how easy it is to train fancy models with modern software, I wanted to at least try a model with lots of flexibility. I ended up trying a random regression forest, since I’ve had success training random forests in the past. During my model exploration phase, I randomly split my training data 50-50. After spending some time optimizing both the random forest model and my simple linear model on half the data, I evaluated their out-of-sample prediction error on the holdout data. While it wasn’t a big signal to go on, my linear model ended up out beating the random forest by 1 percentage point. Ultimately, I fell back on my intuition about leaning on my “prior” when training on such a small dataset, and decided to move forward with the linear model.

In the end, I trained a linear model on the predictors described above. I also included a few interaction terms between the fourth quarter indicator and the weather variables and lag variable. I did this mainly because I could tell from eye-balling the time series (shown above) that there was less volatility in the last quarter, which suggested that the weather and lag variables might have a differential impact on predictions toward the end of the year. The functional form of my model ended up being nothing more complicated than your typical linear regression function:

\[y_i = X_i\beta\]My Tricks

It’s quite easy to get distracted with lots of fun code and fancy models, but at the end of the day, this challenge had a very clear goal: minimize your prediction error on the 7 specific days in the training period. So while my model itself is quite simple, there were a few “tricks” that I used to make sure that it would perform well on this specific task.

Trick 1: Directly minimizing the MAPE function

This isn’t so much of a “trick” as it is good practice in machine learning generally: make sure you are optimizing the right objective function. While I could have trained an ordinary least squares regression model on my data, I knew that the evaluation criteria being used to assess accuracy was mean absolute percentage error (not squared error!). MAPE has some odd behavior, since the same absolute error has dramatically different impacts depending on the underlying true value. Being off by 10 when the true value is 100 gives you a APE of 10%; being off by 10 if the true value of 5 gives you an APE of 200%!

In the end, I simply used a numerical solver to find the coefficients that directly minimized the MAPE of my linear model:

\[\hat{\beta} = \displaystyle{\arg\min_{\beta}} \frac{100}{n} \sum_{i=1}^{n}\left| \frac{y_i - X_i\beta}{y_i} \right|\]While it sounds simple in theory, this process was much more complicated than I anticipated at first. I couldn’t find any off-the-shelf code to do this for me, so I ended up having to write my own model classes. I’ve broken out how to do this in a separate blog post, dedicated specifically to the task of optimizing linear models with custom objective functions.

Trick 2: Sample Weighting

Since my goal was specifically to minimize my prediction error on the days between December 21-27, I knew that getting great predictions in the middle of August wouldn’t be that useful to me. Fortunately, there’s an easy way to tell statistical models which points of your data are most important: sample weighting. I played around with a few different weighting schemes until I found one that looked reasonable to my naked eye. I settled on an exponentially-decaying weight system, in which the very last week in my dataset was given a weight of 2 and each week before that was given a percentage of the previous value until I leveled the weights off at 1.

Additionally, recall earlier how I said I wanted to use Thanksgiving as a trial run for Christmas in my data. This prompted me to manually set the weight for the week of Thanksgiving to 2, so my model would be incentivized to get predictions around the holiday period as close as possible. You can see the weights I used throughout the whole year graphed below:

Trick 3: Getting the Hourly Data Right

I needed to make predictions for three separate time series: number of clients, number of sessions, and total traffic. While the first two of these series were at the daily level, the traffic series was provided for every 4-hour interval throughout the year. Not only did this increase the volatility of that series, but it also meant that it was going to have an out-sized influence on the model evaluation.

This is because the distribution of a metric like absolute percentage error is highly skewed. (A sample histogram of APE values from one of my models is plotted below.) When you average a skewed distribution, outliers in the tail have a large influence over the result. And when you sample from a skewed distribution, the probability of getting values in the tail increases with the size of your sample. The fact that the clients and sessions time series had 7 points in the test period and the traffic series had $7 \times (24/4) = 42$ points in the test period meant that the traffic model was going to have an out-sized impact on my aggregate performance. This caused me to spend some extra time tuning this particular model.

At first, I decided to fit the traffic data much like the other series: I simply used the data provided in 4-hour segments with the addition of dummy variables to indicate which hour of the day it was. That is, my input data had the following form:

traffic_hourly.loc[0:10, ["datetime", "hour", "total_traffic"]]

| datetime | hour | total_traffic | |

|---|---|---|---|

| 0 | 2017-01-01 02:00:00 | 2 | 52117.617780 |

| 1 | 2017-01-01 06:00:00 | 6 | 243.484444 |

| 2 | 2017-01-01 10:00:00 | 10 | 431200.142200 |

| 3 | 2017-01-01 14:00:00 | 14 | 179572.053300 |

| 4 | 2017-01-01 18:00:00 | 18 | 137284.835600 |

| 5 | 2017-01-01 22:00:00 | 22 | 195148.231100 |

| 6 | 2017-01-02 02:00:00 | 2 | 44.942222 |

| 7 | 2017-01-02 06:00:00 | 6 | 7420.586667 |

| 8 | 2017-01-02 10:00:00 | 10 | 71663.502220 |

| 9 | 2017-01-02 14:00:00 | 14 | 281617.066700 |

| 10 | 2017-01-02 18:00:00 | 18 | 265055.573300 |

One problem I found with this model for the traffic data was that it had trouble fitting the peaks and troughs throughout each day. It seemed to be biased toward lower values and would get unexpected jumps in traffic horribly wrong (you can see this in the figure below). This prompted me to take a slightly different approach, which required three steps:

- Aggregate the traffic data for each day

- Fit a linear model on daily traffic

- Disaggregate my predictions for daily traffic into the hourly segments

For this last step, I simply calculated the average proportion of traffic that fell in each segment for each day of the week (averaged across the first 3 weeks in December). The first 10 rows of this data are below, where the hourly_proportion column adds up to 1 for each day.

hourly_proportions.set_index("day_of_week").head(n=10)

| hour | hourly_proportion | |

|---|---|---|

| day_of_week | ||

| sun | 2 | 0.009167 |

| sun | 6 | 0.038168 |

| sun | 10 | 0.152985 |

| sun | 14 | 0.406838 |

| sun | 18 | 0.280672 |

| sun | 22 | 0.112169 |

| mon | 2 | 0.001177 |

| mon | 6 | 0.005286 |

| mon | 10 | 0.134738 |

| mon | 14 | 0.458740 |

This allowed me to simply take the predictions from my daily model and allocate that total traffic into the hourly segments according to these proportions. While this didn’t make my model perfect, after plotting the hourly model against this daily model (shown below), I could clearly see that the daily model was doing a better job of reaching the peaks present in the underlying data. This passed the eyeball test, so I decided to go with the daily model for my final submission. (A convenient side-effect of choosing the daily model for my traffic data was that all three models were essentially identical from a training/data management perspective.)

Putting it all together

After getting all these pieces in place, the last step was to train my final models and make my test predictions. As mentioned above, I used sample weights in my loss function. I also incorporated L2 regularization on my model coefficients to help prevent overfitting. (This step probably didn’t make a huge difference in the challenge; my guess is I would have won without any regularization.) This required me to do some cross-validation to find the optimal regularization penalty (again, see my companion post for technical details). In the end, my final model parameters were trained by taking the $\lambda$ values found from 10-fold cross-validation and minimizing the following objective function:

\[y_i = X_i\beta\] \[\hat{\beta} = \displaystyle{ \arg\min_{\beta} 100 \left( \sum_{i=1}^N w_i \right)^{-1} \sum_{i=1}^N w_i \left| \frac{y_i - X_i\beta}{y_i} \right| + \lambda \sum_{k=1}^K \beta_k^2 }\]My in-sample training error for each of the models was between 25%-30%. After I got my results back from the challenge organizers, it turned out my test error was near 36%. (I was glad to see this figure wasn’t way out of sync with my training values.)

Wrapping up

To conclude, I think there are few important lessons that I took away from this challenge:

- You don’t always need a fancy model

- Always optimize the right objective function

- Exercise discipline during the model exploration phase by holding out a chunk of data and not peeking at it until you are ready to make your final model choice

- Above all, use common sense. Making sure my traffic model passed the eye-ball test (i.e., that my predictions actually looked like the underlying data) was a really important goal of mine. Also, realizing that Christmas was in my test set and would probably exhibit some odd behavior is not something statistics could have told me.

I want to give a big thanks to Astraea Inc. and the Applied Machine Learning Conference for putting this challenge on. While it took way longer than I had anticipated, it was a great experience that ended up paying off in the end!

Alex Miller is a scientist, developer, and former academic. Feel free to connect with him on LinkedIn.